Update — Sep 9th, 2021

I've worked on documenting my processes for local Odoo development recently. Take a look at How I manage hundreds of local development Odoo projects if you are looking for more local development management techniques. — Holden

This is a follow-up to something I wrote a couple of years ago when I was going through the process of running dozens of applications on the same server. Looking back, I wish I had jumped into Docker sooner, but hindsight is 20/20.

Why Docker?

Docker, in my opinion, is still the only real option for running isolated development systems for a team of engineers using varying operating systems while being able to deploy those isolated systems from a local environment, to a development environment, to a production environment.

There are a few other tools out there, like LXC, but they aren’t versatile enough for this use case.

Ultimately with these types of tools (virtualization, containers, virtual machines, etc.), we are just trying to solve a few problems that we run into on development teams.

The Team and Example Project

Think about 10 developers working on a team together to develop an awesome new web application using Python and PostgreSQL. Between these 10 developers, imagine they use 5 different operating systems: Ubuntu, Red Hat, Fedora, Windows, and macOS.

The Python app they are building integrates 3 open source projects behind the scenes running a chat application, queueing software, and a resource monitoring tool that only runs on Linux. The production and staging environments are Linux servers.

How do we get the application running on all 10 developers’ local environments? It’s possible, but not fun. Some people use virtual machines, some may have to use cloud servers for certain services, some dual boot their systems or use a separate laptop, etc.

And this is actually a common, pretty simple example. Think about more complex examples like a client-service business where each developer has between 5–20 projects on their local system at a time, different programming languages or different versions of 1 programming language, different database systems, different dependencies, etc.

The Team’s Needs

The team needs a development environment that does the following:

- Isolates an operating system at a certain version, per service.

- Isolates all dependencies at a certain version.

- Runs essentially anywhere. Any OS, cloud or physical, production or local.

- Performs well enough for developers to not rip their hair out.

What is Docker?

At this point, it seems that most developers have at least run across Docker, even if it’s just from looking at open source projects that provide instructions on running with Docker or seeing articles claiming that if you don’t start using containers you’re living in prehistoric times. (Docker is a good tool, but do what’s best for you and don’t bandwagon just for the sake of using the newest shit.)

So for people who need a quick summary, let’s run through some different terms:

Virtualization

There are different types of virtualization, but ultimately virtualization means the simulation of computer systems or environments on a single host system, isolated into their own space.

Imagine if you have a generic phone that has 4 buttons on it for iOS, Android, Blackberry, or Microsoft. You could “open up” your iOS system and take a couple of pictures before going back to the Android system to edit those two pictures.

Virtualization, in the simplest use case, lets you do that with computers. If you are a macOS user who needs to run the newest Office Suite then you can simulate a Windows 10 system from your Mac.

Your Mac would be considered the host.

The Windows system would be considered a guest operating system.

A tool called a hypervisor interfaces with physical hardware and distributes resources to each guest.

Virtual Machines

You may have experience with tools like VirtualBox or VMware Workstation for virtualization or even local development, but technically Docker does not use the same type of virtualization.

In practice, two factors that stand out with virtual machines, compared to containers, are:

- Virtual Machines always provide a full operating system.

- Virtual Machines take a performance hit because of the extra layers of software placed between the host system (your computer) and the guest system (the operating system running in the virtual machine.)

Virtual machines are good in some ways and bad in others. They meet some of the team’s needs, but they are not fast and they do not deploy as easily.

There are a lot of details about resource sharing, networking, hypervisors, hardware utilization, partitioning, etc. involved in explaining how virtual machines work behind the scenes. VMware has some good resources out there if you want to dig into those details.

Containers

Finally, something Docker specific. As noted above, Docker does not use virtual machines or the kind of virtualization that virtual machines rely on.

It uses containers. There are quite a few technical differences between containers and virtual machines, but you can visualize containers as micro virtual machines. Docker does not package up the container in the same way that a virtual machine does, with the entire guest operating system kernel, hypervisor, and hardware virtualization tooling, so we can create hundreds of these simple containers and network them together without the overhead of a virtual machine.

Remember that when running containers, they are still an isolated environment.

Your computer is the docker host.

Each container runs as its own “micro virtual machine”. You can treat it like a remote server in a way, by copying files to and from it, shelling into the container, networking with the container’s IP, etc.

Volumes

A volume is a way to share data between the host (your computer) and a specific container. With each container being as close to stateless as possible, you can map data into the container from your machine. You will see more about this below when we set up our docker-compose.yml file.
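As a rough illustration of the idea, the sketch below maps a temporary host directory into a throwaway container and reads a file from inside it. The alpine:3 image, the /mnt/demo path, and the show_volume helper are assumptions picked for the demo; they are not part of the Odoo setup.

```shell
#!/bin/sh
# Hypothetical host directory, created only for this demonstration.
demo_dir="$(mktemp -d)"
echo "hello from the host" > "$demo_dir/note.txt"

# Map the host directory (left of the colon) to /mnt/demo inside a
# throwaway container (right of the colon) and read the file from inside.
show_volume() {
    docker run --rm -v "$demo_dir:/mnt/demo" alpine:3 cat /mnt/demo/note.txt
}

# Only try to start a container when the docker CLI is available.
if command -v docker >/dev/null 2>&1; then
    show_volume || echo "docker is installed, but the container could not be started"
else
    echo "docker not found; skipping the container step"
fi
```

The file is never copied into the container. It lives on the host, and the container sees it through the mapping.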

Images

Images are basically compiled containers: a container is a running instance of an image. Images are built from a set of instructions, defining how to set everything up from scratch, that look something like:

FROM odoo:10.0
RUN touch /var/log/odoo.log
RUN git clone https://github.com/me/my_addons.git /opt/odoo/addons/my_addons

Think about these like Chef recipes or other server provisioning tools. They tell Docker how to build an image so that the resulting container is exactly the same for every developer and every environment. These files are called Dockerfiles.

There is a process of building an image from these files and pushing it to an image repository so that your team or other developers can use it. See hub.docker.com.
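A minimal sketch of that build-and-push process, assuming a Dockerfile already sits in the current directory. The my/odoo:10.0 image name and the two helper functions are placeholders for illustration, and pushing only works after you have logged in to a registry you can write to.

```shell
#!/bin/sh
# Hypothetical image name; replace "my" with your own Docker Hub account
# or private registry.
IMAGE="my/odoo:10.0"

# Build an image from the Dockerfile in the current directory and tag it.
build_image() {
    docker build -t "$IMAGE" .
}

# Upload the tagged image so teammates can pull it.
# Requires a prior "docker login".
push_image() {
    docker push "$IMAGE"
}

# Typical usage, once a Dockerfile exists in the current directory:
#   build_image && push_image
```

Other developers would then reference the same name in their own setup and let Docker pull it.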

Running Odoo On Docker

There’s much, much, much more to learn about Docker and the ecosystem that revolves around it, but hopefully that gives you a rough idea of the basics.

Let’s get Odoo up and running now.

Installing Docker and Docker Compose

Make sure you have Docker and Docker Compose installed. For Linux-based systems, you can use your package manager:

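# Using Ubuntu / Debian (the Docker engine package is named docker.io)
$ sudo apt install docker.io docker-compose

# Using Red Hat / Fedora (package names can vary between releases)
$ sudo dnf install docker docker-compose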
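To double check the installation, a small script along these lines can report whether both tools are on your PATH. The report_tool helper is a made-up name for this sketch.

```shell
#!/bin/sh
# Print the version of a CLI tool, or say that it is missing.
# report_tool is a hypothetical helper, not part of Docker.
report_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: $("$1" --version)"
    else
        echo "$1: not installed"
    fi
}

report_tool docker
report_tool docker-compose
```

If either line says "not installed", go back to your package manager before moving on.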

Creating Our Docker Compose File

Without Docker, we would need to work through a whole series of manual steps to create our local environment for Odoo development.

All of that has been baked into a template for us already as a docker image (see the Dockerfile for the steps followed). All we need to do is reference the docker hub image.

1. Make a folder on your system, which will become your project environment:

$ mkdir -p ~/projects/odoo-docker
$ cd ~/projects/odoo-docker
$ touch docker-compose.yml
$ mkdir ./config && touch config/odoo.conf
$ mkdir ./addons

2. Add the ./docker-compose.yml contents:

I’ve created the sample docker-compose.yml file below. I haven’t talked about Docker Compose in this article yet, but it’s an additional tool created by Docker that allows you to orchestrate multiple containers together. In the case of Odoo, we need the web application container but also the PostgreSQL database container.

version: '3.3'

services:

  # Web Application Service Definition
  # --------
  #
  # All of the information needed to start up an odoo web
  # application container.
  web:
    image: odoo:12.0
    depends_on:
      - db

    # Port Mapping
    # --------
    #
    # Here we are mapping a port on the host machine (on the left)
    # to a port inside of the container (on the right.) The default
    # port on Odoo is 8069, so Odoo is running on that port inside
    # of the container. But we are going to access it locally on
    # our machine from localhost:9000.
    ports:
      - 9000:8069

    # Data Volumes
    # --------
    #
    # This defines files that we are mapping from the host machine
    # into the container.
    #
    # Right now, we are using it to map a configuration file into
    # the container and any extra odoo modules.
    volumes:
      - ./config:/etc/odoo
      - ./addons:/mnt/extra-addons

    # Odoo Environment Variables
    # --------
    #
    # The odoo image uses a few different environment
    # variables when running to connect to the postgres
    # database.
    #
    # Make sure that they are the same as the database user
    # defined in the db container environment variables.
    environment:
      - HOST=db
      - USER=odoo
      - PASSWORD=odoo

  # Database Container Service Definition
  # --------
  #
  # All of the information needed to start up a postgresql
  # container.
  db:
    image: postgres:10

    # Database Environment Variables
    # --------
    #
    # The postgresql image uses a few different environment
    # variables when running to create the database. Set the
    # username and password of the database user here.
    #
    # Make sure that they are the same as the database user
    # defined in the web container environment variables.
    environment:
      - POSTGRES_PASSWORD=odoo
      - POSTGRES_USER=odoo
      - POSTGRES_DB=postgres  # Leave this set to postgres

3. Add the odoo.conf to ./config/odoo.conf which will be mapped inside the container using volumes.

This is a very simple and fairly standard Odoo configuration. The only part that may be confusing is the db_host. Docker understands references to the service name (see the docker-compose.yml above, where we named our postgres container db), so it finds the proper IP for that container based on the name.

[options]
admin_passwd = my_admin_password

# |--------------------------------------------------------------------------
# | Port Options
# |--------------------------------------------------------------------------
# |
# | Define the application port and longpolling ports.
# |
xmlrpc_port = 8069

# |--------------------------------------------------------------------------
# | Database Configurations
# |--------------------------------------------------------------------------
# |
# | Database configurations that setup Odoo to connect to a
# | postgresql database.
# |
db_host = db
db_user = odoo
db_password = odoo
db_port = 5432

4. Run the containers

You can start up the container by running:

$ docker-compose up

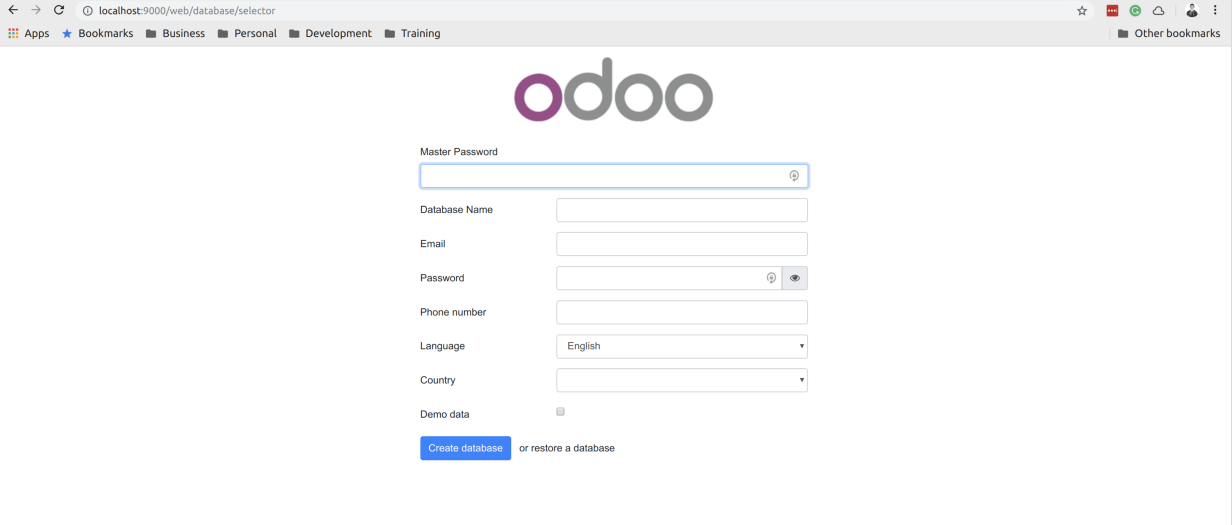

You will see the output from the database and the Odoo process. Once startup has completed, you can access your instance at http://localhost:9000.
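If you would rather script the wait than watch the logs, a small polling loop is one option. This is only a sketch: the wait_for_odoo name and the defaults are made up here, it assumes curl is installed, and it assumes the instance answers on the /web/login path.

```shell
#!/bin/sh
# Poll a URL until it answers or the attempts run out.
# Usage: wait_for_odoo [url] [max_attempts]
wait_for_odoo() {
    url="${1:-http://localhost:9000/web/login}"
    max_attempts="${2:-60}"
    attempt=0
    while [ "$attempt" -lt "$max_attempts" ]; do
        # -f makes curl fail on HTTP errors; --max-time caps each request.
        if curl -fsS -o /dev/null --max-time 2 "$url" 2>/dev/null; then
            echo "Odoo is answering at $url"
            return 0
        fi
        sleep 1
        attempt=$((attempt + 1))
    done
    echo "Gave up waiting for $url after $max_attempts attempts" >&2
    return 1
}

# Example call, left commented out so nothing runs on its own:
#   wait_for_odoo http://localhost:9000/web/login 120
```

Each attempt takes between one and three seconds, so the second argument is a rough upper bound rather than an exact timeout.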

And that’s it. Congrats. You have a local Odoo environment running inside docker containers.

Notes On Workflow And Management

This is just a simple tutorial on getting started with docker and Odoo, but there’s a lot you can do from here to make the workflow for you and your team a smoother process.

Get Used To The Daily Workflow

We started up the container above with a simple command:

$ docker-compose up

And we can then stop it with Ctrl+C. But make sure to review the docker and docker-compose CLI tools to see everything that they can do:

# Start containers up in the background
$ docker-compose up -d

# Restart containers
$ docker-compose restart

# Stop containers
$ docker-compose stop

# Destroy containers (WARNING: data stored only inside those containers is lost as well)
$ docker-compose down

# Show container processes
$ docker ps
$ docker ps --filter name={container_name}

# Show container process stats
$ docker stats

# Open a shell inside a container (similar to SSH-ing into a server)
$ docker exec -it {container_id} sh
$ docker exec -it $(docker ps -q --filter name={container_name}) sh
$ docker exec -u {user} -it {container_id} sh
$ docker exec -u root -it {container_id} bash

# Copy contents to container from host
# (use an absolute path on the container side; ~ is not expanded there)
$ docker cp ~/my/file {container_id}:/tmp/myfile

# Copy contents from container to host
$ docker cp {container_id}:/tmp/myfile ~/my/file

# Tail the process from a container
$ docker logs -f {container_id}
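Given the warning on docker-compose down above, one way to keep your database around is a named volume for the postgres data directory. The sketch below writes a docker-compose.override.yml next to the main file, which Compose merges in automatically on the next up. The db-data volume name is an assumption; /var/lib/postgresql/data is where the postgres image stores its data.

```shell
#!/bin/sh
# Write an override file in the current project directory.
# "db-data" is a hypothetical volume name chosen for this example.
cat > docker-compose.override.yml <<'EOF'
version: '3.3'

services:
  db:
    volumes:
      # Keep the postgres data directory in a named volume
      - db-data:/var/lib/postgresql/data

volumes:
  db-data:
EOF

echo "Wrote docker-compose.override.yml"
```

With this in place, docker-compose down removes the containers but leaves the named volume alone unless you also pass -v.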

Some Ideas On What To Do Next

- Create a project make process. If you can get to the point where any developer can git pull your project, run a single script or command, and have the application up and running, that’s ideal. With a setup like this, you could, for example, just add a make.sh script in the root of the project which would download any addons that you need.

- Build your own docker image. You could have your own my/odoo:12.0 docker image which you reference from the docker-compose.yml file and which has all the dependencies and tools that you always use configured and ready to go.

- Auto-deploy your docker containers on repository push.

- Automatically download sample or pre-seeded databases on container run.
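To make the first idea a little more concrete, here is one possible shape for such a make.sh. Everything in it is an assumption for illustration: the ADDON_REPOS variable is a made-up, space-separated list of git URLs, and the script expects to be run from the project root created earlier.

```shell
#!/bin/sh
# make.sh: hypothetical bootstrap script for the project root.
# ADDON_REPOS is an assumed, space-separated list of git URLs, e.g.
#   ADDON_REPOS="https://github.com/me/my_addons.git" ./make.sh
ADDON_REPOS="${ADDON_REPOS:-}"

# Make sure the folders mapped in docker-compose.yml exist.
mkdir -p config addons

# Clone each addon repository, or update it if it is already there.
for repo in $ADDON_REPOS; do
    name="$(basename "$repo" .git)"
    if [ -d "addons/$name" ]; then
        git -C "addons/$name" pull --ff-only
    else
        git clone "$repo" "addons/$name"
    fi
done

# Start the containers in the background when the tooling is present.
if command -v docker-compose >/dev/null 2>&1 && [ -f docker-compose.yml ]; then
    docker-compose up -d
else
    echo "docker-compose or docker-compose.yml not found; skipping start"
fi
```

A new developer would then only need to git pull the project and run the script once.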

Do whatever is best for you and your team.

Thanks For Reading

I appreciate you taking the time to read any of my articles. I hope it has helped you out in some way. If you're looking for more ramblings, take a look at the entire catalog of articles I've written. Give me a follow on Twitter or Github to see what else I've got going on. Feel free to reach out if you want to talk!